Written By Shubham Arora

Published By: Shubham Arora | Published: Jan 27, 2026, 11:10 AM (IST)

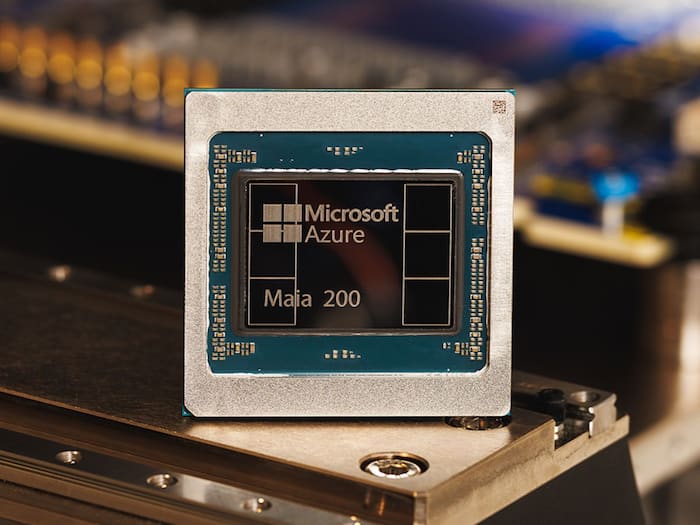

Microsoft has launched a new in-house AI chip called the Maia 200, designed specifically to speed up AI inference workloads. Inference is the stage where trained AI models generate responses, and it has become one of the most expensive parts of running large-scale AI systems. With Maia 200, Microsoft is aiming to reduce both cost and latency for these workloads across its cloud services.

Maia 200 is not a general-purpose AI chip. It has been built with one clear focus – running large AI models efficiently once they are already trained. Microsoft says the chip is optimised for modern models that rely on low-precision computing, which is now common for inference.

The chip is manufactured using TSMC’s 3nm process and packs more than 140 billion transistors. It supports the low-precision FP4 and FP8 number formats, which are widely used to make inference faster and cheaper. According to Microsoft, this allows Maia 200 to deliver higher throughput while keeping power usage under control, even when running very large models.
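To give a feel for how coarse these formats are, here is a minimal Python sketch that rounds a number to the nearest value in a tiny floating-point format. It assumes the common OCP variants, FP8 E4M3 (4 exponent bits, 3 mantissa bits, largest finite value 448) and FP4 E2M1 (2 exponent bits, 1 mantissa bit, largest value 6); Microsoft has not said which exact variants Maia 200 uses. The function `round_minifloat` is a hypothetical helper written for illustration, not part of any Maia SDK.

```python
import math


def round_minifloat(x: float, exp_bits: int, man_bits: int, max_val: float) -> float:
    """Round x to the nearest value of a small float format (illustrative model).

    Assumptions: values saturate at max_val, subnormals are kept,
    and NaN/Inf encodings are ignored.
    """
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), max_val)          # saturate instead of overflowing
    bias = 2 ** (exp_bits - 1) - 1
    min_exp = 1 - bias                # exponent of the smallest normal number
    _, e = math.frexp(a)              # a = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, min_exp)         # unbiased exponent, clamped for subnormals
    step = 2.0 ** (exp - man_bits)    # spacing between neighbouring values here
    q = round(a / step) * step        # round-half-to-even onto that grid
    return sign * min(q, max_val)


FP8_E4M3 = (4, 3, 448.0)  # assumed OCP FP8 E4M3 parameters
FP4_E2M1 = (2, 1, 6.0)    # assumed OCP FP4 E2M1 parameters

print(round_minifloat(0.1, *FP8_E4M3))  # 0.1015625
print(round_minifloat(0.3, *FP4_E2M1))  # 0.5
print(round_minifloat(5.0, *FP4_E2M1))  # 4.0
```

FP4 E2M1 can only represent 0, 0.5, 1, 1.5, 2, 3, 4 and 6 (plus their negatives), which is why models run in these formats typically rely on per-block scaling factors to keep accuracy acceptable; the sketch leaves scaling out.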

Maia 200 comes with 216GB of HBM3e memory, offering very high bandwidth, along with a large pool of on-chip SRAM. This setup is meant to reduce data bottlenecks, which are a common issue when models scale up in size.
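A quick back-of-the-envelope calculation shows why low precision and a large memory pool go together. The sketch below assumes 216GB means 216 × 10^9 bytes and counts only model weights, ignoring the KV cache, activations, quantisation scale factors and any runtime overhead, so real capacity for a single chip would be noticeably lower. The names `HBM_BYTES`, `BYTES_PER_PARAM` and `max_params` are hypothetical and chosen for illustration.

```python
# Assumption: 216GB of HBM3e treated as 216 * 10**9 bytes.
HBM_BYTES = 216 * 10**9

# Storage cost of one weight in each format (scale factors ignored).
BYTES_PER_PARAM = {"BF16": 2.0, "FP8": 1.0, "FP4": 0.5}


def max_params(capacity_bytes: int, bytes_per_param: float) -> int:
    """Upper bound on how many weights fit in the given memory."""
    return int(capacity_bytes // bytes_per_param)


for fmt, size in BYTES_PER_PARAM.items():
    print(f"{fmt}: ~{max_params(HBM_BYTES, size) / 1e9:.0f}B parameters")
# BF16: ~108B parameters
# FP8: ~216B parameters
# FP4: ~432B parameters
```

Under these simplifying assumptions, halving the bits per weight roughly doubles the model size that fits on one accelerator, which also halves the bytes that must be moved per weight for each generated token.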

At the system level, Microsoft has built Maia 200 around a scale-up network that uses standard Ethernet rather than proprietary interconnects. Multiple accelerators can be linked together, allowing large inference clusters to run efficiently without adding unnecessary cost or complexity.

Maia 200 is already being deployed in Microsoft’s Azure data centres in the US, starting with the US Central region. More regions are expected to follow. The chip will be used to run a range of workloads, including models offered through Azure AI services, Microsoft 365 Copilot, and newer OpenAI models hosted on Azure.

Microsoft’s internal teams are also using Maia 200 for tasks such as synthetic data generation and reinforcement learning, which support the development of future AI models.

Alongside the hardware, Microsoft is previewing a Maia software development kit. The SDK includes support for PyTorch, a Triton compiler, and optimised libraries to help developers run models on Maia 200 with minimal changes. The idea is to make it easier to move workloads across different types of AI hardware within Azure.

With Maia 200, Microsoft is clearly pushing further into custom silicon, following a path already taken by other large cloud providers, as the cost of running AI at scale continues to grow.