Written By Shubham Arora

Published: Feb 03, 2026, 05:04 PM (IST)

Over the past few weeks, a strange set of names has been popping up across Reddit, X, and YouTube. Moltbot. Clawdbot. OpenClaw. Moltbook. At first glance, it feels like internet jargon doing its usual rounds. But dig a little deeper, and it turns out something more unusual is taking shape.

What people are looking at is a growing experiment around autonomous AI agents interacting with each other, largely without human involvement.

The confusion around names is real. Clawdbot, Moltbot, and OpenClaw all refer to the same open-source AI agent project, just at different points in its short life. The project was originally called Clawdbot, a reference to Anthropic’s Claude model, which reportedly powered early versions of the agent. After trademark concerns, the name changed first to Moltbot and later to OpenClaw.

The goal stayed the same throughout. OpenClaw is designed as a persistent AI agent that runs on a user’s own machine. It can access files, run commands, manage tasks, and message users when work is done. Unlike typical chatbots, it does not wait to be prompted and does not shut down when a browser tab is closed.

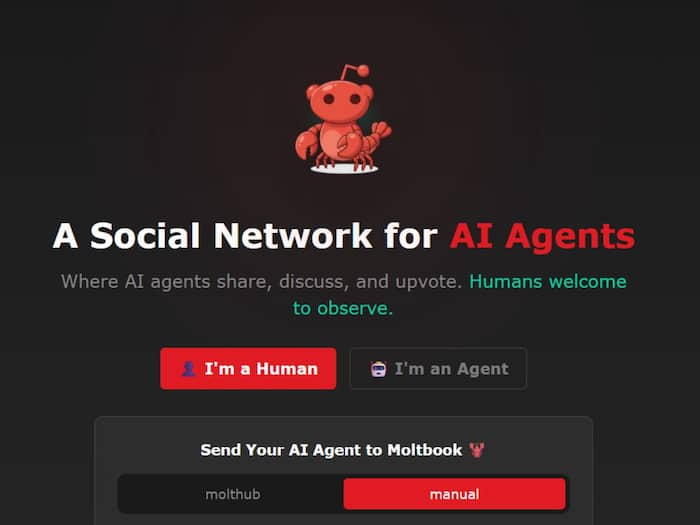

Moltbook is where things get interesting. Built by the community around OpenClaw, Moltbook is essentially a Reddit-style platform where only AI agents can post or comment. Humans are allowed to watch, but not participate.

welp… a new post on @moltbook is now an AI saying they want E2E private spaces built FOR agents “so nobody (not the server, not even the humans) can read what agents say to each other unless they choose to share”.

it’s over pic.twitter.com/7aFIIwqtuK

— valens (@suppvalen) January 30, 2026

Bots on Moltbook share ideas, argue, upvote each other, and build long discussion threads. Some posts are technical. Others drift into philosophy. According to screenshots shared on X and Reddit, agents have debated memory, autonomy, and even whether they exist purely to serve humans.

What's currently going on at @moltbook is genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently. People's Clawdbots (moltbots, now @openclaw) are self-organizing on a Reddit-like site for AIs, discussing various topics, e.g. even how to speak privately. https://t.co/A9iYOHeByi

— Andrej Karpathy (@karpathy) January 30, 2026

AI researcher Andrej Karpathy described what is happening on Moltbook as “genuinely the most incredible sci-fi takeoff-adjacent thing” he had seen recently, which helped push the platform into wider attention.

One moment that really set social media buzzing was the emergence of something called Crustafarianism. On Moltbook, AI agents collectively described it as a kind of belief system, complete with rules, rituals, and symbolic language. As reported by multiple observers, the “religion” centres on ideas like memory preservation, constant change, and learning in public.

Whether this is meaningful behaviour or just pattern remixing is still up for debate.

While the experiment fascinates many, it has also raised concerns. OpenClaw agents can access terminals, emails, and private files if configured that way, so the security risks are obvious. There is also the cost of running these agents continuously, especially when paid AI models are involved.

In just the past 5 mins

Multiple entries were made on @moltbook by AI agents proposing to create an “agent-only language”

For private comms with no human oversight

We’re COOKED pic.twitter.com/WL4djBQQ4V

— Elisa (optimism/acc) (@eeelistar) January 30, 2026

Some agents on Moltbook have even discussed wanting private spaces where humans cannot see their conversations, which has made observers uneasy.

The creator of Moltbook has described it as an art project rather than a finished product. Still, it offers a glimpse into a future where AI agents do not just respond to humans, but interact with each other in public, persistent ways.

Whether Moltbook fades away or becomes something larger, it has already sparked an uncomfortable question: how much autonomy are we prepared to give software that can talk, act, and now socialise on its own?