Written By Deepti Ratnam

Edited By: Deepti Ratnam | Published By: Deepti Ratnam | Published: Feb 11, 2026, 10:46 AM (IST)

Concern over the spread of deepfakes and AI-made content has grown to the point where the Indian government has started taking major steps to curb AI-generated content on social media platforms. In a notable step, the central government has updated its existing IT rules to bring synthetic content under regulation. The changes are intended to reduce the misuse of misleading AI-generated content. The government is also trying to stop misinformation and make platforms act faster when harmful content appears online.

The amendment was notified by the central government on February 10 and will take effect in the country from February 20. It modifies the Information Technology Intermediary Rules, which were first introduced in 2021. This will be the first time AI-generated content is formally recognized, scrutinized, and regulated under Indian law.

Notably, the rules will apply to all online platforms that host or publish AI-generated content, including Facebook, Instagram, Telegram, WhatsApp, and more.

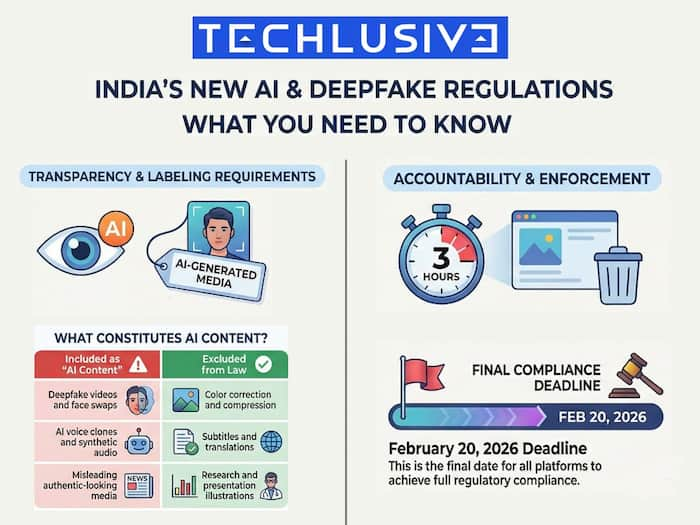

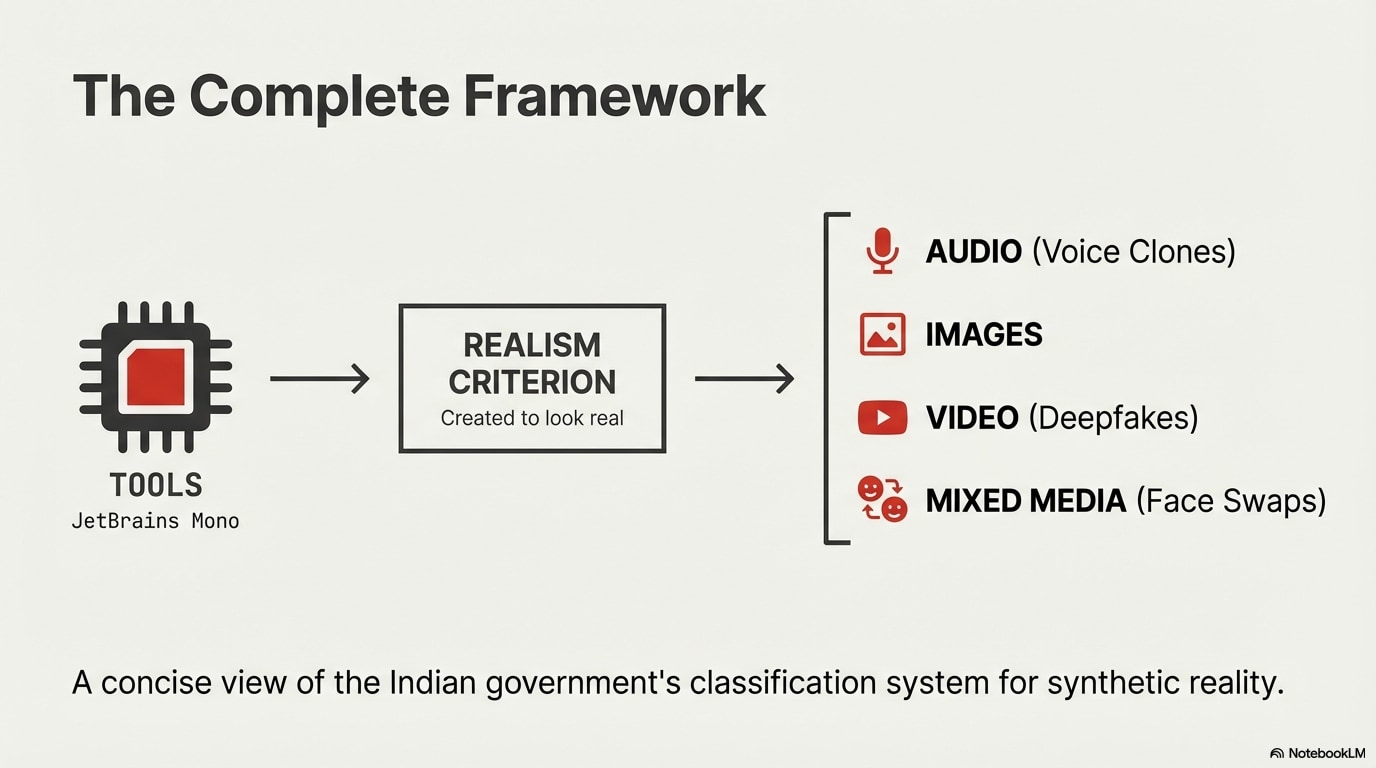

The Indian government has clearly defined what counts as AI-generated or synthetically generated content. The definition covers several types of media, including audio, images, videos, and mixed media, that are created or changed using computer tools in a way that makes them look real. Deepfake videos, AI voice clones, and face swaps fall under this category.

The main focus will be on content that misleads people by appearing authentic. However, the central government also clarified that color correction, subtitles, translations, compression, and accessibility changes are not covered by the new rules, provided these changes do not alter the original meaning. Illustrations used for training, research, or presentations are also excluded.

The central government has also listed some extra rules for large platforms such as Facebook and Instagram, which will face stricter requirements.

These rules include:

One of the most noticeable changes for users will be labels on AI-generated posts. These labels will help users identify synthetic or machine-made content before sharing or engaging with it. Users will also need to confirm whether AI tools were used in creating the content.

Giving false information can now lead to account action or legal consequences in serious cases.

The first draft of the rules was shared in 2025. The central government has now released the final notification, and platforms have until February 20, 2026 to comply with all the rules and regulations.