Written By Deepti Ratnam

Edited By: Deepti Ratnam | Published By: Deepti Ratnam | Published: Oct 23, 2025, 11:59 AM (IST)

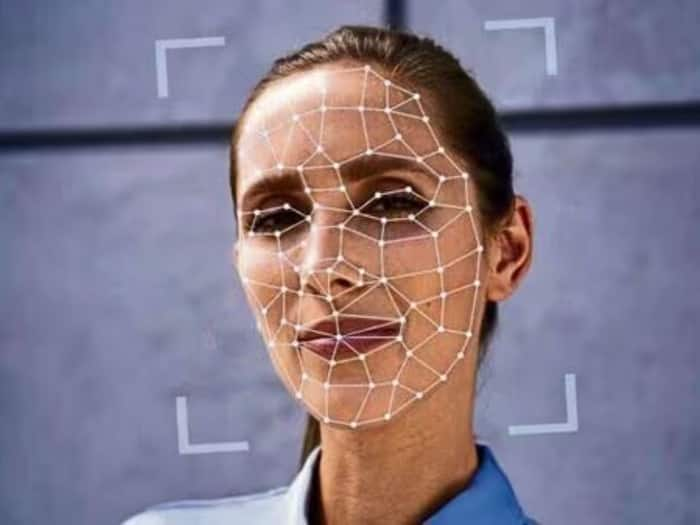

As artificial intelligence tools rapidly improve at generating believable images, videos, and audio, the Indian government is planning to regulate how AI content is distributed online. It has proposed changes to the current IT rules to ensure that AI-generated content is labeled, so that users are not misled and do not fall victim to deepfakes or fake news.

The proposed regulations place a greater burden on large social media platforms with millions of users. Giants such as Facebook, YouTube, and WhatsApp will have to check AI-generated content, label it appropriately, and prevent the spread of misleading material. Labels on AI content will also have to be preserved, not altered or deleted, so that users remain aware of them.

IT Minister Ashwini Vaishnaw said, “In Parliament as well as many forums, there have been demands that something be done about deepfakes, which are harming society…people using some prominent person’s image, which then affects their personal lives, and privacy. Steps we have taken aim to ensure that users get to know whether something is synthetic or real. It is important that users know what they are seeing.”

Under the proposed rules, all AI-generated material must carry identifying marks. For videos, labels should cover the first 10 percent of the clip, whereas for images or graphics, a label disclosing AI involvement should occupy at least 10 percent of the visual area. Users who post synthetic media will need to declare that it is AI-generated, and platforms will need technical tools to verify these declarations before the content goes viral.
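To make the 10 percent thresholds concrete, the arithmetic can be sketched in a few lines of Python. This is only an illustration based on the figures reported above, not the text of the draft rules: the function names, the pixel-based area measure, and the reading of "first 10 percent" as a share of a clip's running time are all assumptions.

```python
# Illustrative sketch only: names and interpretation are assumptions,
# based on the 10 percent figures reported in this article.
LABEL_PERCENT = 10  # share of the visual area, or of the clip's duration


def label_covers_enough(label_w: int, label_h: int,
                        frame_w: int, frame_h: int) -> bool:
    """Return True if a rectangular label fills at least 10% of the frame.

    Integer arithmetic is used so the boundary case is compared exactly.
    """
    label_area = label_w * label_h
    frame_area = frame_w * frame_h
    return label_area * 100 >= LABEL_PERCENT * frame_area


def label_window_seconds(duration_s: float) -> float:
    """Length of the opening stretch of a clip (first 10% of its duration)."""
    return duration_s * LABEL_PERCENT / 100


if __name__ == "__main__":
    # A full-width banner on a 1920x1080 frame, 108 pixels tall.
    print(label_covers_enough(1920, 108, 1920, 1080))
    # The opening stretch of a 60-second clip, in seconds.
    print(label_window_seconds(60))
```

Under these assumptions, a full-width banner 108 pixels tall on a 1920x1080 image sits exactly at the 10 percent mark, and a one-minute video would carry its label for the first six seconds.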

Generative AI has made it increasingly easy to produce highly realistic yet fake content. Deepfakes and fake media can manipulate public opinion, distort the news, or impersonate people for financial gain or political influence. Cases of AI-created misinformation have already surfaced in India, leading to legal proceedings and court orders, which underscores the need for stricter regulation.

The rules target AI-generated content intended for public dissemination. Even messaging services such as WhatsApp may be obliged to act once synthetic media comes to their attention and to ensure that it does not circulate any further. The amendments also define AI-generated content as content that is produced or modified using computer algorithms but appears real to viewers.

The new measures are intended to make AI technologies more accountable, minimize their misuse, and help users distinguish between genuine and synthetic media. The government expects labeling to make the online ecosystem more secure, while social media platforms will need to implement stronger monitoring and verification procedures.