Written By Shubham Verma

Published By: Shubham Verma | Published: Mar 19, 2024, 09:06 AM (IST)

Nvidia’s Chief Executive Jensen Huang on Monday kicked off the company’s annual developer conference with a series of announcements aimed at maintaining the company’s leading position in the artificial intelligence industry. Standing on a stage in Silicon Valley, Huang introduced the company’s latest chip, which is 30 times faster than its predecessor for some tasks. He also revealed a new set of software tools designed to help developers sell AI models more easily to companies that use technology from Nvidia. Nvidia’s customers include most of the world’s largest technology firms.

The chip and software announcements made at the conference will help determine whether Nvidia can maintain its 80 percent share of the market for AI chips. Huang, wearing his signature leather jacket, joked that the day’s keynote would be full of dense math and science and that it was not a concert. Nvidia, once known mostly among computer gaming enthusiasts, has become a recognised leader in the tech industry, on par with giants like Microsoft. In its most recent fiscal year, Nvidia’s sales surpassed $60 billion, more than double the previous year’s total.

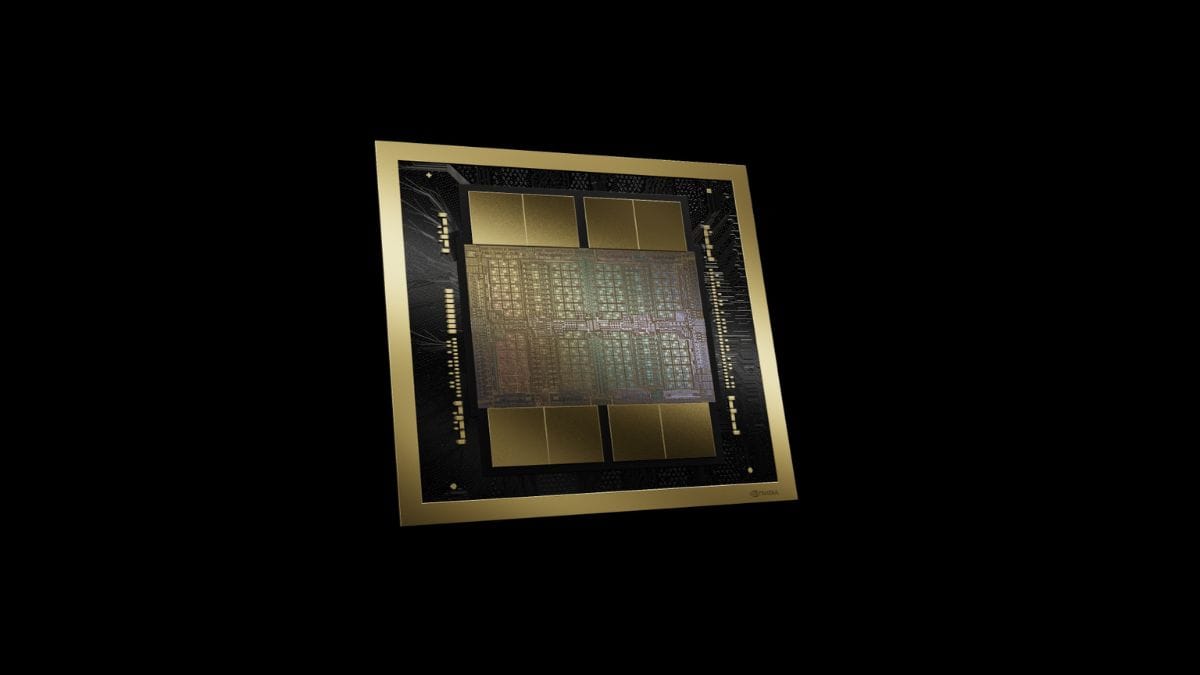

The new flagship chip, called the Blackwell B200, takes two squares of silicon the size of the company’s previous offering and binds them together into a single component. The company claims it can offer up to 20 petaflops of FP4 horsepower using its 208 billion transistors. Huang didn’t give specific details about how well the chip performs when processing large amounts of data to train chatbots, which is the kind of work that has powered most of Nvidia’s soaring sales. He also didn’t reveal pricing details.

Nvidia Blackwell B200 GPU

Nvidia is also shifting from selling single chips to selling complete systems. Its latest system houses 72 of its AI chips and 36 central processors. It contains 600,000 parts in total and weighs 1,361 kg. Its new GB200 ‘super’ chip combines two B200 GPUs with a Grace CPU to deliver up to 30 times the performance for an LLM inference workload.

While many analysts expect Nvidia’s market share to decrease by several percentage points in 2024 as new products from competitors come to market and Nvidia’s largest customers make their own chips, the company has also built a significant battery of software products. The new software tools, called microservices, improve system efficiency across a wide variety of uses, making it easier for businesses to incorporate AI models into their work.

Nvidia also introduced a new line of chips designed for cars with new capabilities to run chatbots inside the vehicle. The company deepened its already-extensive relationships with Chinese automakers, saying that electric vehicle makers BYD and Xpeng will both use its new chips. Huang outlined a new series of chips for creating humanoid robots, inviting several of the robots made using the chips to join him on the stage. Nvidia’s major customers, including Amazon.com, Alphabet’s Google, Microsoft, OpenAI, and Oracle, are expected to use the new chip in cloud-computing services they sell, as well as for their own AI offerings.